Face-matching flaw outlined

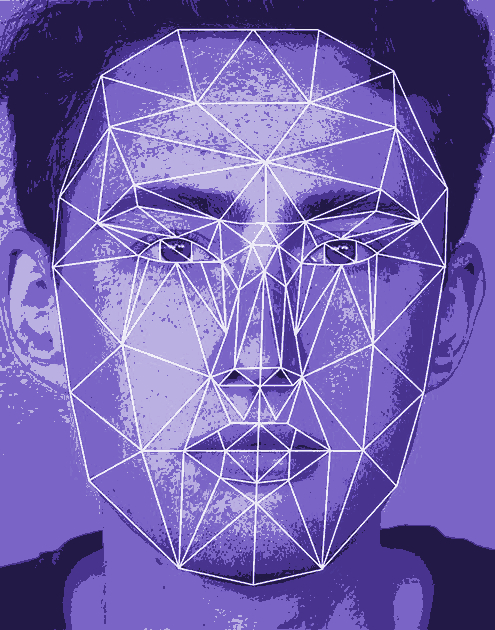

Australia’s new face-matching system will be prone to misreading non-Caucasian faces, experts say.

Federal and state governments are in the process of building a vast new database of information from driver’s licences, passports, visas and other sources.

Officials say the system is designed to match faces in near real time “to identify suspects or victims of terrorist or other criminal activity, and help to protect Australians from identity crime”.

Academics, lawyers, privacy experts and human rights groups fear the system will erode privacy rights and quell public protest and dissent.

Australia’s system is based in part on one used by the US Federal Bureau of Investigation (FBI), which has previously been shown to make more errors when identifying African Americans.

“[Facial recognition technology] has accuracy deficiencies, misidentifying female and African American individuals at a higher rate,” a full committee of the US House of Representatives found last year.

“Human verification is often insufficient as a backup and can allow for racial bias.”

Professor Liz Jackson, an expert on forensic and biometric databases at Monash University, says facial recognition systems often reflect the biases of the societies in which they were developed.

“What seems to be the case is the algorithms are good at identifying the people who look like the creators of the algorithm,” she told the Guardian.

“What that means in the British and Australian context is it’s good at identifying white men.”

“It’s not that there’s an inherent bias in the algorithm, it’s just [that the pool of] people on whom the algorithms are being tested is too narrow.”